8x GB200 NVL72 Cluster with 1.6T OSFP InfiniBand Architecture

Complete Bill of Materials and Technical Specifications for AI/HPC Workloads

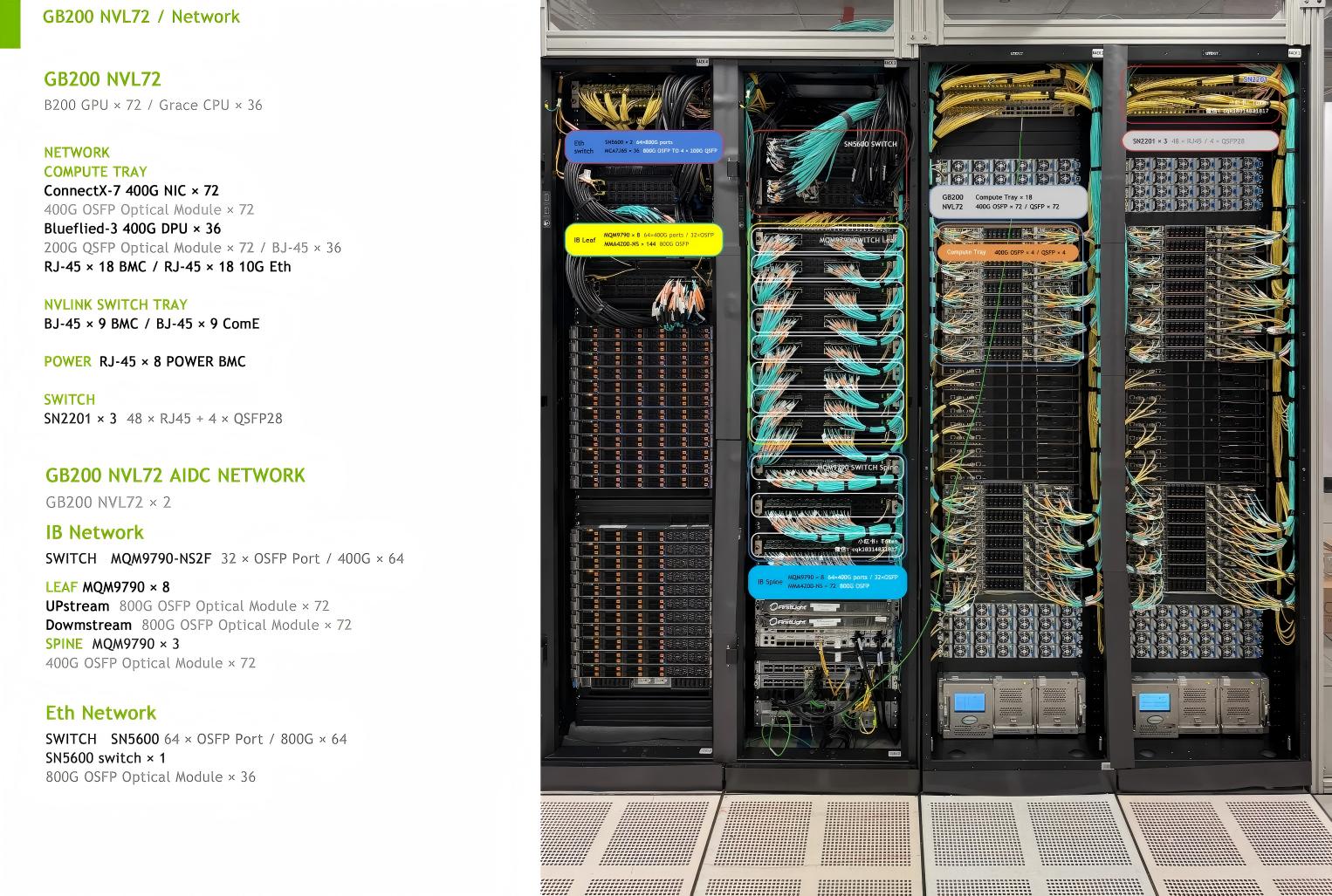

This solution provides a fully optimized InfiniBand networking architecture for 8 GB200 NVL72 supercomputing nodes, designed for AI training, scientific simulations, and high-performance computing workloads. The implementation features a two-tier leaf-spine topology with 1:1 non-blocking design, utilizing 1.6T OSFP optical modules for switch-to-switch connectivity and 400G OSFP modules for server-to-leaf connections.

Network Topology

Architecture: Two-tier leaf-spine InfiniBand fabric

Oversubscription Ratio: 1:1 (non-blocking)

Total Compute Nodes: 8 × GB200 NVL72 systems

Total GPU Count: 576 GPUs (72 per system)

Fabric Bandwidth: 1.6T per link, aggregate 12.8T bisection bandwidth

Leaf Layer: 3 × NVIDIA Q3400-RA switches (2 minimum, 3 recommended)

Spine Layer: 1 × NVIDIA Q3400-RA switch

Server Connectivity: 400G ConnectX-7 NICs to leaf switches

Switch Connectivity: 1.6T OSFP links between leaf and spine

Total Switch Ports: 576 × 400G server ports + 216 × 1.6T fabric ports
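
The headline counts in this summary follow from simple multiplication. The sketch below reproduces them from the stated inputs; the function name and the one-NIC-port-per-GPU mapping are assumptions made for illustration, inferred from the 576 server ports quoted above.

```python
# Inputs taken from the topology summary above.
SYSTEMS = 8            # GB200 NVL72 systems
GPUS_PER_SYSTEM = 72   # GPUs per system
LEAF_SWITCHES = 3
SPINE_SWITCHES = 1


def topology_totals(systems=SYSTEMS, gpus_per_system=GPUS_PER_SYSTEM):
    """Return the total GPU count, 400G server port count, and switch count."""
    gpus = systems * gpus_per_system
    # Assumption: one 400G NIC port per GPU, matching the 576 server ports.
    server_ports_400g = gpus
    return {
        "gpus": gpus,
        "server_ports_400g": server_ports_400g,
        "switches": LEAF_SWITCHES + SPINE_SWITCHES,
    }


totals = topology_totals()
print(totals)
```

Changing `SYSTEMS` or `GPUS_PER_SYSTEM` gives a quick first estimate of how the port count scales for a different cluster size.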

Optical Transceiver Requirements

| Component Type | Specification | Quantity | Location / Breakdown | Total Ports |
|---|---|---|---|---|
| 1.6T OSFP InfiniBand Transceiver | 1.6T OSFP, XDR InfiniBand, 0-100m over SMF | 216 | Switch-to-switch links | 216 ports |
| Leaf Layer (Uplink) | 1.6T OSFP to Spine switches | 72 | 3 Leaf switches × 24 uplinks | 72 ports |
| Leaf Layer (Downlink) | 1.6T OSFP on server-facing downlink ports | 72 | 3 Leaf switches × 24 downlinks | 72 ports |
| Spine Layer | 1.6T OSFP to Leaf switches | 72 | 1 Spine switch × 72 ports | 72 ports |
| 400G OSFP InfiniBand Transceiver | 400G OSFP, NDR InfiniBand, 0-100m over SMF | 576 | Server-to-leaf connections | 576 ports |
| ConnectX-7 NIC Transceivers | 400G OSFP, per NIC requirement | 576 | 72 NICs × 8 servers | 576 ports |
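
The two totals in this table (216 and 576) are sums of the per-layer rows. As a rough cross-check, the rows can be modeled as units × modules per unit and summed by speed. The `BomLine` class and its field names are hypothetical helpers introduced for this sketch only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BomLine:
    """One breakdown row: `units` devices with `per_unit` transceivers each."""
    name: str
    speed_gbps: int
    units: int
    per_unit: int

    @property
    def quantity(self) -> int:
        return self.units * self.per_unit


# Breakdown rows as stated in the table above.
BOM = [
    BomLine("Leaf uplink", 1600, units=3, per_unit=24),
    BomLine("Leaf downlink", 1600, units=3, per_unit=24),
    BomLine("Spine", 1600, units=1, per_unit=72),
    BomLine("ConnectX-7 NIC", 400, units=8, per_unit=72),
]


def totals_by_speed(lines):
    """Sum transceiver quantities per line rate (in Gbps)."""
    out = {}
    for line in lines:
        out[line.speed_gbps] = out.get(line.speed_gbps, 0) + line.quantity
    return out


bom_totals = totals_by_speed(BOM)
print(bom_totals)  # {1600: 216, 400: 576}
```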

Switch & NIC Hardware Requirements

| Component Type | Model/Specification | Quantity | Configuration Details |
|---|---|---|---|
| InfiniBand Leaf Switches | NVIDIA Q3400-RA (36-port 1.6T OSFP) | 3 | 24 downlinks to servers, 12 uplinks to spine (per switch) |
| InfiniBand Spine Switch | NVIDIA Q3400-RA (36-port 1.6T OSFP) | 1 | 72 ports total (3×24 from leaf switches) |
| 400G InfiniBand NICs | NVIDIA ConnectX-7 VPI (400G OSFP) | 576 | 72 per GB200 system, 8 systems total |
| InfiniBand Cables | MTP/MPO-24 to 6×LC duplex, 5-30m | 216 | For 1.6T OSFP connections (24 fibers per cable) |
| 400G DAC/AOC Cables | 400G OSFP to OSFP, 3-5m | 576 | Alternative to optical for short reaches (<5m) |
| Optical Fiber Panels | 96-port LC duplex, 1RU | 6 | For structured fiber management |

Leaf Layer Configuration (3 Switches)

Switch Model: NVIDIA Q3400-RA with 36 × 1.6T OSFP ports

Server Connections: 24 × 400G downlinks per switch (connects to 24 ConnectX-7 NICs)

Spine Connections: 12 × 1.6T uplinks per switch (connects to spine switch)

Total Port Utilization: 24 + 12 = 36 ports (fully utilized)

Connectivity per Leaf: Each leaf connects to 8 GB200 nodes (3 leaves × 8 = 24 nodes capacity)
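
A leaf is non-blocking when its uplink capacity is at least equal to its downlink capacity. The sketch below checks the port budget and computes the downlink-to-uplink bandwidth ratio from the per-leaf figures listed above. The function names are illustrative, and the check covers a single leaf only, not the fabric as a whole.

```python
from fractions import Fraction

# Per-leaf figures from the list above.
LEAF_PORTS = 36
DOWNLINKS, DOWNLINK_GBPS = 24, 400
UPLINKS, UPLINK_GBPS = 12, 1600


def leaf_port_budget(downlinks, uplinks, total_ports):
    """Return (used, free) ports; raise if the leaf is over-committed."""
    used = downlinks + uplinks
    if used > total_ports:
        raise ValueError(f"{used} ports requested, only {total_ports} available")
    return used, total_ports - used


def oversubscription(downlinks, down_gbps, uplinks, up_gbps):
    """Downlink/uplink bandwidth ratio; a value <= 1 means non-blocking."""
    return Fraction(downlinks * down_gbps, uplinks * up_gbps)


used, free = leaf_port_budget(DOWNLINKS, UPLINKS, LEAF_PORTS)
ratio = oversubscription(DOWNLINKS, DOWNLINK_GBPS, UPLINKS, UPLINK_GBPS)
print(f"ports used: {used}, free: {free}, down/up ratio: {ratio}")
```

With these inputs the leaf carries 9.6 Tbps of downlink traffic against 19.2 Tbps of uplink capacity, so the uplinks are not the bottleneck.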

Spine Layer Configuration (1 Switch)

Switch Model: NVIDIA Q3400-RA with 36 × 1.6T OSFP ports

Leaf Connections: 72 × 1.6T downlinks (24 from each of 3 leaf switches)

Port Utilization: 72 ports used on spine switch

Redundancy: Optional second spine for N+1 redundancy (adds 72 more 1.6T transceivers)

Server Connectivity (per GB200 NVL72 System)

NICs per Server: 72 × ConnectX-7 400G VPI adapters

Transceivers per Server: 72 × 400G OSFP InfiniBand optical modules

Cabling per Server: 72 × fiber connections to leaf switches

Port Mapping: Each server connects to all 3 leaf switches (24 ports per leaf)

Bandwidth per Server: 400G × 72 = 28.8Tbps theoretical per server
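
One way to realize the "24 ports per leaf" mapping is to assign NICs to leaves in contiguous blocks. The scheme below is a hypothetical illustration, not a layout prescribed by this design; a real deployment might prefer a rail-aligned or interleaved assignment. It also recomputes the theoretical per-server bandwidth.

```python
NICS_PER_SERVER = 72
LEAVES = 3
NIC_GBPS = 400


def nic_to_leaf(nic_index, nics=NICS_PER_SERVER, leaves=LEAVES):
    """Map a NIC index to a leaf index using contiguous blocks (assumed scheme)."""
    if not 0 <= nic_index < nics:
        raise IndexError(f"NIC index {nic_index} out of range 0..{nics - 1}")
    per_leaf = nics // leaves  # 24 NICs per leaf with the figures above
    return nic_index // per_leaf


def ports_per_leaf(nics=NICS_PER_SERVER, leaves=LEAVES):
    """Count how many of one server's NICs land on each leaf."""
    counts = [0] * leaves
    for nic in range(nics):
        counts[nic_to_leaf(nic, nics, leaves)] += 1
    return counts


server_bandwidth_gbps = NICS_PER_SERVER * NIC_GBPS
print(ports_per_leaf())                        # [24, 24, 24]
print(server_bandwidth_gbps / 1000, "Tbps")    # 28.8 Tbps
```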

Network Performance Metrics

Bisection Bandwidth: 12.8 Tbps full non-blocking capacity

Latency: < 600ns switch-to-switch, < 1μs end-to-end

Message Rate: 200 million messages per second per port

Fabric Bandwidth: 1.6T per link, aggregate 345.6T across fabric

GPU-to-GPU Bandwidth: 400G per GPU, full bisection bandwidth
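
The aggregate fabric figure is the number of 1.6T links multiplied by the link rate. The sketch below reproduces it in integer Gbps to avoid floating-point rounding, and adds the total GPU injection bandwidth for comparison. The 216-link count is taken from the transceiver totals in this document; the helper names are illustrative.

```python
LINK_GBPS = 1600       # one 1.6T OSFP link
FABRIC_LINKS = 216     # 1.6T fabric ports stated in this document
GPUS = 576
GPU_GBPS = 400         # per-GPU network bandwidth


def aggregate_gbps(count, gbps_each):
    """Total bandwidth in Gbps for `count` links or endpoints."""
    return count * gbps_each


def gbps_to_gigabytes_per_s(gbps):
    """Convert gigabits per second to gigabytes per second (8 bits per byte)."""
    return gbps / 8


fabric_gbps = aggregate_gbps(FABRIC_LINKS, LINK_GBPS)
injection_gbps = aggregate_gbps(GPUS, GPU_GBPS)
print(fabric_gbps / 1000, "Tbps across fabric links")   # 345.6
print(injection_gbps / 1000, "Tbps GPU injection")      # 230.4
print(gbps_to_gigabytes_per_s(LINK_GBPS), "GB/s per 1.6T link")  # 200.0
```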

| Performance Metric | Specification | Value | Industry Comparison |
|---|---|---|---|
| Switch ASIC Bandwidth | Q3400 switch capacity | 25.6 Tbps | Industry-leading for XDR InfiniBand |
| Port Speed | 1.6T OSFP interface | 1.6 Tbps (200 GB/s) | Next-generation beyond 800G |
| Fabric Latency | End-to-end latency | < 1 μs | Optimal for AI training synchronization |
| Power per Port | 1.6T OSFP transceiver | 18-22W | Efficient for high-density deployments |
| Cooling Requirement | Per switch chassis | 3-5 kW | Liquid cooling recommended |

1.6T OSFP InfiniBand Transceiver Details

Form Factor: OSFP (Octal Small Form Factor Pluggable)

Data Rate: 1.6 Tbps (8 × 200G lanes)

Protocol: XDR InfiniBand (200G per lane)

Reach: 0-100m over OM4 MMF, 0-2km over SMF

Wavelength: 850nm VCSEL for MMF, 1310nm for SMF

Power Consumption: 18-22W typical

Operating Temperature: 0°C to 70°C commercial

Compatibility: NVIDIA Q3400-RA switches, Quantum-X800 ASIC

400G OSFP InfiniBand Transceiver Details

Form Factor: OSFP (Octal Small Form Factor Pluggable)

Data Rate: 400 Gbps (4 × 100G lanes)

Protocol: NDR InfiniBand (400G per port)

Reach: 0-100m over OM4 MMF, 0-2km over SMF

Wavelength: 850nm VCSEL for MMF, 1310nm for SMF

Power Consumption: 10-12W typical

Operating Temperature: 0°C to 70°C commercial

Compatibility: NVIDIA ConnectX-7 NICs, Quantum-2 ASIC
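
The power and data-rate figures for the two module types can be combined into an energy-per-bit estimate, which makes the efficiency comparison concrete. This is a back-of-the-envelope calculation from the typical power ranges listed above, not a vendor-published metric; the function name is illustrative.

```python
def picojoules_per_bit(watts, gbps):
    """Energy per bit in pJ: W / (Gbit/s) is nJ/bit, so scale by 1000."""
    return watts * 1000 / gbps


# Typical power ranges and data rates from the two transceiver lists above.
MODULES = {
    "1.6T OSFP": {"gbps": 1600, "watts": (18, 22)},
    "400G OSFP": {"gbps": 400, "watts": (10, 12)},
}

efficiency = {
    name: tuple(picojoules_per_bit(w, spec["gbps"]) for w in spec["watts"])
    for name, spec in MODULES.items()
}

for name, (low, high) in efficiency.items():
    print(f"{name}: {low:.2f} to {high:.2f} pJ/bit")
```

On these numbers the 1.6T module moves each bit for roughly half the energy of the 400G module, even though its absolute power draw is higher.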

Alternative Deployment Scenarios

Minimal Configuration: 2 Leaf + 1 Spine

Redundant Configuration: 3 Leaf + 2 Spine (N+1)

All-To-All Configuration: Direct ConnectX-7 to Spine

Multi-rail Configuration: Dual NICs per GPU

Extended Reach: SMF transceivers for >100m

Cost Optimized: 400G DAC for <3m connections

| Configuration | Transceiver Count | Cost Impact | Performance Impact | Recommended Use |
|---|---|---|---|---|
| Minimal (2 Leaf) | 144 1.6T + 576 400G | -25% switch cost | 2:1 oversubscription | Budget-constrained AI training |
| Recommended (3 Leaf) | 216 1.6T + 576 400G | Baseline | 1:1 non-blocking | Production AI/HPC clusters |
| Redundant (3+2) | 288 1.6T + 576 400G | +33% switch cost | N+1 fault tolerance | Mission-critical workloads |
| Dual-rail (2×NIC) | 216 1.6T + 1,152 400G | +100% NIC cost | 2× bandwidth per GPU | Extreme performance requirements |
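
The transceiver counts in this table can be reproduced with a small formula. The sketch assumes each leaf carries 24 uplink and 24 downlink 1.6T modules, and that each spine adds 24 modules per leaf, which matches the "adds 72 more 1.6T transceivers" note for a second spine. The function and parameter names are hypothetical.

```python
MODULES_PER_LEAF_SIDE = 24  # 1.6T modules per leaf in each direction (assumed)


def transceivers_1600g(leaves, spines, per_side=MODULES_PER_LEAF_SIDE):
    """1.6T module count: leaf uplinks + leaf downlinks + spine-side ports."""
    leaf_side = leaves * 2 * per_side
    spine_side = spines * leaves * per_side
    return leaf_side + spine_side


def transceivers_400g(systems=8, nics_per_system=72, rails=1):
    """400G module count; `rails` is the number of NICs per GPU."""
    return systems * nics_per_system * rails


SCENARIOS = {
    "Minimal (2 Leaf)": dict(leaves=2, spines=1, rails=1),
    "Recommended (3 Leaf)": dict(leaves=3, spines=1, rails=1),
    "Redundant (3+2)": dict(leaves=3, spines=2, rails=1),
    "Dual-rail (2xNIC)": dict(leaves=3, spines=1, rails=2),
}

counts = {
    name: (transceivers_1600g(s["leaves"], s["spines"]),
           transceivers_400g(rails=s["rails"]))
    for name, s in SCENARIOS.items()
}

for name, (n1600, n400) in counts.items():
    print(f"{name}: {n1600} x 1.6T + {n400} x 400G")
```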

Critical Implementation Factors

Thermal Management: 1.6T OSFP transceivers generate significant heat (18-22W each); ensure adequate cooling

Power Requirements: Each Q3400-RA switch consumes 3-5kW; plan power distribution accordingly

Cable Management: 576 fiber connections require structured cabling and proper bend radius protection

Compatibility Testing: All optical transceivers must be validated with NVIDIA switches and NICs

Firmware Management: Consistent firmware levels across all switches and NICs for optimal performance

Monitoring & Management: Implement NVIDIA UFM or similar for fabric management and monitoring
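
For the thermal and power items above, a rough power envelope can be estimated from the quantities and per-unit ranges quoted in this document. The sketch treats the 3-5 kW switch figure and the transceiver power as separate line items, which is an assumption: if the switch figure already includes installed optics, the total below overstates the load.

```python
def power_range_w(count, low_w, high_w):
    """Return the (low, high) total power in watts for `count` identical items."""
    return count * low_w, count * high_w


# (quantity, low W, high W) per item, using figures from this document.
ITEMS = {
    "1.6T OSFP transceivers": (216, 18, 22),
    "400G OSFP transceivers": (576, 10, 12),
    "Q3400-RA switches": (4, 3000, 5000),   # 3 leaf + 1 spine
}

budget = {name: power_range_w(*spec) for name, spec in ITEMS.items()}
total_low = sum(low for low, _ in budget.values())
total_high = sum(high for _, high in budget.values())

for name, (low, high) in budget.items():
    print(f"{name}: {low / 1000:.2f} to {high / 1000:.2f} kW")
print(f"Total: {total_low / 1000:.2f} to {total_high / 1000:.2f} kW")
```

The estimate covers network equipment only; the GB200 NVL72 racks themselves are outside its scope.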